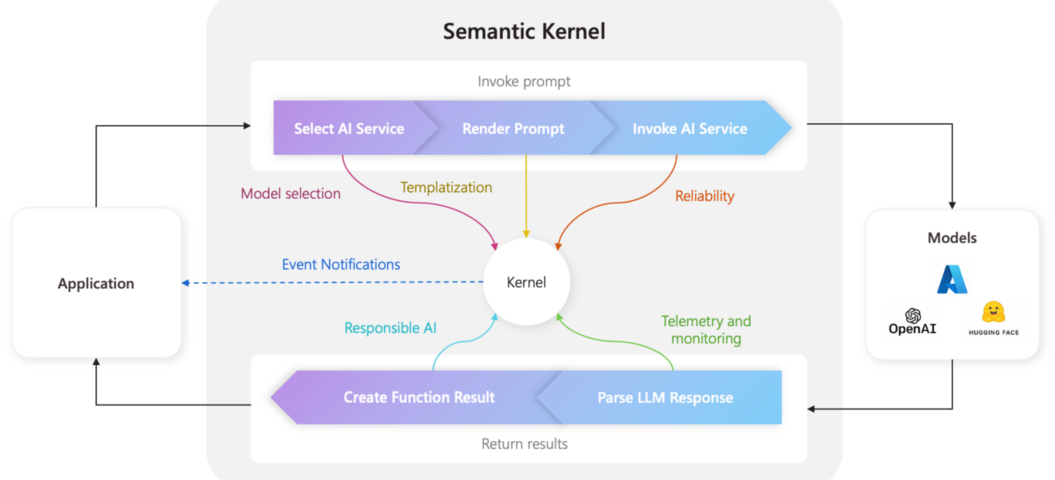

Unlocking Advanced AI Use Cases with Semantic Kernel

By Chrishmal Fernando

Since 2018, iTelaSoft has been at the forefront of developing conversational interfaces, well before the era of generative AI. Our early projects ranged from simple web-based virtual assistants to more complex use cases such as voice-based telephony applications and SMS-based real-time chat agents. At the time, Amazon Lex and Microsoft LUIS were the go-to tools for rapid development.

Find Semantic Kernel at https://github.com/microsoft/semantic-kernel

At iTelaSoft, we’ve used Semantic Kernel, Microsoft's open-source SDK for integrating large language models into applications, to develop a wide range of generative AI applications. These range from straightforward virtual assistants to complex conversation observers that invoke background actions based on the state of the conversation. Whether an application was simple or intricate, Semantic Kernel gave us a blueprint for building scalable and reliable AI solutions.

Learn more at https://learn.microsoft.com/en-us/semantic-kernel/overview/